Big-Data components

Hadoop

Hadoop is a Java-based programming framework that supports the processing of large data sets in a distributed computing environment. It is a free, open-source framework developed by the Apache Software Foundation.

The Hadoop framework lets a system run applications over voluminous data on clusters of thousands of nodes holding thousands of terabytes. Its distributed file system facilitates rapid data transfer among nodes and allows the system to keep operating uninterrupted when a node fails. This approach lowers the risk of catastrophic system failure, even if a significant number of nodes become inoperative.

Doug Cutting, the creator of Hadoop, named the framework after his child’s stuffed toy elephant. Hadoop has evolved over time. The current Hadoop ecosystem consists of the Hadoop kernel, MapReduce, the Hadoop Distributed File System (HDFS), and a number of related projects such as Apache Hive, HBase, Pig, ZooKeeper and more.

Major industries and application areas that use the Hadoop framework include:

- Online travel

- Mobile data

- E-commerce

- Energy discovery

- Energy savings

- Infrastructure management

- Image processing

- Fraud detection

- IT security

- Health care

HDFS

The Hadoop Distributed File System (HDFS) is the primary storage system used by Hadoop applications.

HDFS enables high-performance access to data across Hadoop clusters. It has become a key tool for managing pools of Big Data and supporting Big Data analytics. HDFS can be deployed on low-cost commodity hardware, where server failures are common, so it is designed to be highly fault-tolerant.

Parallel processing: HDFS breaks the data into pieces and distributes them across multiple nodes in the cluster. On retrieval, it uses the same mechanism in reverse: parallel tasks are sent to the different nodes to collect the pieces, and the combined result is returned to the requester.
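The split, distribute, and collect cycle described above can be sketched as a small in-memory simulation. This is only an illustration under stated assumptions, not how HDFS is implemented: the 8-byte block size, the round-robin placement, and the function names `split_into_blocks`, `distribute`, and `read_back` are all hypothetical, and the "nodes" are plain Python dictionaries.

```python
from concurrent.futures import ThreadPoolExecutor

BLOCK_SIZE = 8  # bytes; tiny for illustration (real HDFS blocks are far larger)


def split_into_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Cut the payload into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def distribute(blocks: list[bytes], node_count: int) -> list[dict[int, bytes]]:
    """Place block i on node i % node_count (hypothetical round-robin placement)."""
    nodes: list[dict[int, bytes]] = [{} for _ in range(node_count)]
    for index, block in enumerate(blocks):
        nodes[index % node_count][index] = block
    return nodes


def read_back(nodes: list[dict[int, bytes]]) -> bytes:
    """Ask every node for its blocks in parallel, then reassemble in block order."""
    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        per_node = list(pool.map(lambda node: list(node.items()), nodes))
    indexed = [pair for node_blocks in per_node for pair in node_blocks]
    return b"".join(block for _, block in sorted(indexed))


payload = b"Hadoop stores large files as blocks spread over many nodes."
nodes = distribute(split_into_blocks(payload), node_count=3)
restored = read_back(nodes)
```

Here `restored` equals the original `payload`, even though no single node ever held the whole file.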

HDFS is built to support applications with large data sets, including individual files that reach into the terabytes. It uses a master/slave architecture: each cluster has a single NameNode that manages file system operations, supported by DataNodes that manage data storage on the individual compute nodes.
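A toy model may help make the division of labour concrete. In the sketch below, the `NameNode` class keeps only metadata (which blocks form a file and where copies live), while the `DataNode` objects hold the bytes. The class layout, the 4-byte block size, the replica-placement rule, and the `alive` flag are all assumptions made for illustration. In particular, the sketch routes reads through the NameNode for brevity, whereas in real HDFS the client fetches block data directly from the DataNodes. Keeping more than one copy of each block is what lets the read succeed after a node fails.

```python
class DataNode:
    """Holds block contents; knows nothing about file names."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.blocks: dict[str, bytes] = {}
        self.alive = True


class NameNode:
    """Holds only metadata: which blocks make up a file and where replicas live."""

    def __init__(self, data_nodes: list[DataNode], replication: int = 2) -> None:
        self.data_nodes = data_nodes
        self.replication = replication
        self.file_blocks: dict[str, list[str]] = {}
        self.block_locations: dict[str, list[DataNode]] = {}

    def write(self, path: str, data: bytes, block_size: int = 4) -> None:
        block_ids = []
        for offset in range(0, len(data), block_size):
            number = offset // block_size
            block_id = f"{path}#{number}"
            # Put each replica on a different node, rotating the starting node.
            targets = [
                self.data_nodes[(number + r) % len(self.data_nodes)]
                for r in range(self.replication)
            ]
            for node in targets:
                node.blocks[block_id] = data[offset:offset + block_size]
            self.block_locations[block_id] = targets
            block_ids.append(block_id)
        self.file_blocks[path] = block_ids

    def read(self, path: str) -> bytes:
        parts = []
        for block_id in self.file_blocks[path]:
            # Use the first replica whose node is still alive.
            node = next(n for n in self.block_locations[block_id] if n.alive)
            parts.append(node.blocks[block_id])
        return b"".join(parts)


cluster = [DataNode(f"dn{i}") for i in range(3)]
name_node = NameNode(cluster, replication=2)
name_node.write("/logs/app.log", b"fault tolerant storage")
cluster[0].alive = False  # simulate one DataNode failing
recovered = name_node.read("/logs/app.log")
```

With three DataNodes and two replicas per block, the file is still fully readable after `dn0` is marked as failed.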

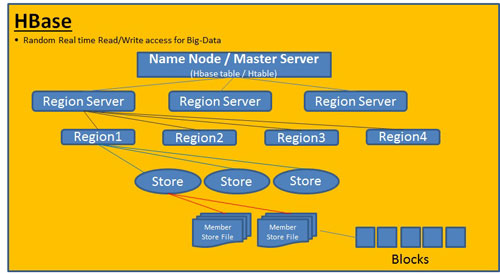

HBase

HBase is a non-relational (NoSQL) database that runs on top of the Hadoop Distributed File System (HDFS). It is column-oriented and provides fault-tolerant storage and quick access to large quantities of sparse data.

HBase gives random, real-time access to your Big Data. It is suitable for enterprises dealing with large tables that have billions of rows and millions of columns.
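The idea of sparse, column-oriented storage with random access by row key can be illustrated with a few lines of Python. This is a conceptual sketch only: it does not use the HBase API, and the `SparseTable` class, the row keys, and the `family:qualifier` column names are hypothetical. The point is that a row stores only the cells that were actually written, so a table can have a very large number of possible columns without paying for the empty ones.

```python
from collections import defaultdict
from typing import Optional


class SparseTable:
    """Toy wide-column table: row key -> {"family:qualifier": value}."""

    def __init__(self) -> None:
        self.rows: dict[str, dict[str, str]] = defaultdict(dict)

    def put(self, row_key: str, column: str, value: str) -> None:
        self.rows[row_key][column] = value

    def get(self, row_key: str, column: str) -> Optional[str]:
        # Random access by row key; a cell that was never written yields None.
        return self.rows.get(row_key, {}).get(column)

    def stored_cells(self) -> int:
        # Only written cells occupy space; absent columns cost nothing.
        return sum(len(cells) for cells in self.rows.values())


table = SparseTable()
table.put("user#1001", "profile:name", "Ada")
table.put("user#1001", "profile:email", "ada@example.com")
table.put("user#1002", "profile:name", "Lin")
table.put("user#1002", "activity:last_login", "2024-01-05")

email_1002 = table.get("user#1002", "profile:email")  # never written
```

The two rows use three distinct columns between them, yet only the four written cells are stored, and the lookup for the missing cell returns `None` rather than a placeholder value.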

Benefits of HBase:

- Fault tolerant (distributed data)

- Flexible data model

- Real-time data lookups

- Atomic and consistent row-level operations

- Automatic sharding and load balancing

- High availability through automatic failover

- In-memory caching

- Server-side processing via filters and coprocessors

- Replication across the data center

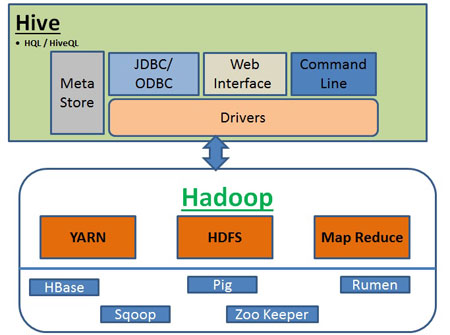

Hive

Hive is an Apache data warehouse infrastructure built on top of Hadoop.

Apache Hive provides HiveQL, an SQL-like query language for accessing large data sets stored in Hadoop’s HDFS. Hive's supported file formats include TextFile, SequenceFile, ORC, and RCFile.
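To give a feel for the kind of query this enables, the sketch below runs a simple aggregate query. It uses Python's built-in `sqlite3` module purely as a local stand-in: it does not connect to Hive or Hadoop, and the `page_views` table and its columns are hypothetical. The `SELECT` statement itself sticks to basic syntax (`GROUP BY`, `COUNT(*)`, `ORDER BY`) that HiveQL also accepts.

```python
import sqlite3

# In-memory SQLite database standing in for a Hive table (assumption for the demo).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE page_views (page TEXT, user_id INTEGER)")
conn.executemany(
    "INSERT INTO page_views VALUES (?, ?)",
    [("/home", 1), ("/home", 2), ("/cart", 1), ("/home", 3)],
)

# Count views per page, most viewed first.
query = """
    SELECT page, COUNT(*) AS views
    FROM page_views
    GROUP BY page
    ORDER BY views DESC
"""
rows = conn.execute(query).fetchall()
conn.close()
```

In Hive, the same statement would be issued against a table whose data lives in HDFS instead of a local database.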

Features of Hive:

- Indexing to provide acceleration

- Metadata storage in an RDBMS, which supports faster query execution

- Operating on compressed data stored in the Hadoop system

- HiveQL - SQL support